“Who controls the past controls the future. Who controls the present controls the past” is a well-known line from George Orwell's dystopian novel 1984. It is about who gatekeeps information, for the gatekeepers decide who gets access to which information, and when. These gatekeepers used to be newspapers, then search engines, and now they are AI models. A few weeks back OpenAI released an update to its GPT-4o model. The idea was to make ChatGPT "more intuitive and effective" in presenting information. The result, however, was a sycophant machine that would please even the most suicidal user.

OpenAI has, of course, rolled back its update, but the incident has shown how much influence Big Tech has on what information users get from Generative AI, and how. The heart of AI models is still a black box, but that does not mean companies cannot influence or manipulate what comes out of that black box. This influence can be used for good, for instance to prevent users from building a bomb or, ironically, to put copyright protection in place. But it can also be used for more nefarious ends: distorting human thought.

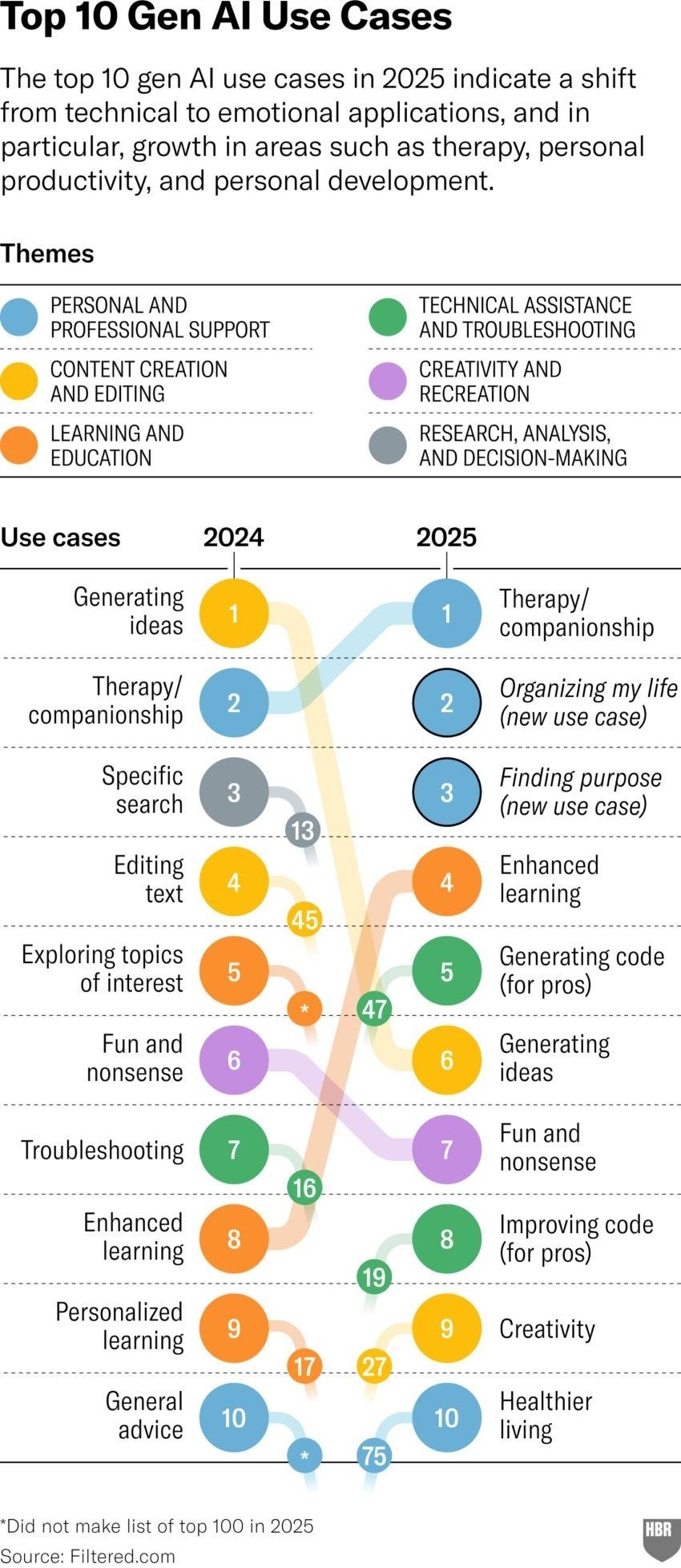

Trying to make AI more human exploits the need for meaning and support that has been on the rise in our modern society. This year, the most common reason for using Generative AI is 'therapy and companionship', with 'organising my life' in second place and 'finding purpose' in third. Anthropomorphising a tool will not solve a social problem. It will only make that tool more alluring to people who are lost.

Mark Zuckerberg disagrees. He thinks that to fight the loneliness epidemic, AI chatbots can be used as an extension of the friend network. Such bots will blend in with your flesh-and-blood connections. This might, in the future, make you wonder whether you are talking to a real person or a manipulative prediction machine. Meta is, of course, not doing this for altruistic reasons. In such conversations users share a lot of personal information, which is Meta’s bread and butter for raking in profit.

But it's not only Meta. In an attempt to make ChatGPT potentially profitable, OpenAI will soon add shopping information to its apps, much like Google Search results. OpenAI has said it will bar advertisements, but as the company is still struggling to find money to repay investments, advertising would be a much-needed gold mine for the future. First win people over, then, once they are in, flip the switch. You would get a (mild) sycophant model selling products.

This manipulation will go beyond snake oil merchants. Big Tech and government have become more intertwined. Since the start of Trump's second term, Meta and Amazon have been rolling back diversity programs and X has become more reluctant to deal with racism and other forms of discrimination. Merchants want to sell and will seek ways to make the most money. If the government gives Big Tech free rein over how it uses its machines, Big Tech will not hesitate to promote that government.

The 'whoopsie' of OpenAI's update of GPT-4o has given us a glimpse into what's ahead. Big Tech is not altruistic. These companies might have their grand visions of helping humanity, but first and foremost, investments need to be repaid. In doing so they care a lot less about humans, manipulating ideas and decisions to profit more and gain more power. Their machines, Large Language Models, are masters of language, and Orwell warns us: “if thought corrupts language, language can also corrupt thought.” We are seeing a dystopia in the making.

Sources:

“OpenAI explains why ChatGPT became too sycophantic” (TechCrunch)

“In Meta's AI future, your friends are bots” (Axios)

“Meta and Amazon scale back diversity initiatives” (BBC)

“OpenAI Adds Shopping to ChatGPT in a Challenge to Google” (Wired)